AWS Lambda Layers

AWS Lambda is one of the most widely used AWS services. It is used to build event-driven and serverless applications, and it supports many languages, including Java, Python, and Node.js. Choosing the right language and managing dependencies carefully matters, because both affect the size of the deployment package and, in turn, how long a Function takes to load when a new instance starts. AWS Lambda layers are one of the best ways to reduce the size of deployment packages. A layer can contain a custom runtime, libraries, or other dependencies.

Diagram placeholder

In this article, we will take a deep look at AWS Lambda packaging, how Lambda layers work, and best practices for using them.

AWS Lambda Framework Packaging

AWS Lambda works with many languages, such as Java, Python, and Node.js. A Lambda Function consists of your code (compiled code or scripts) and the dependencies it needs to run. To deploy it to the AWS cloud, you package everything into a ZIP archive, which is called a deployment package.

You can upload the package directly to Lambda if its size is less than 50 MB. Otherwise, you must first upload the package to Amazon S3 and then deploy it to the Lambda service from there.
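The packaging step and the size check can be sketched in a few lines of Python. This is a minimal illustration using only the standard library. The helper names (`build_package`, `upload_route`) are hypothetical, and the 50 MB figure is the direct-upload limit quoted above.

```python
import io
import zipfile

# Direct-upload ceiling quoted in the text; larger packages go through Amazon S3.
DIRECT_UPLOAD_LIMIT_BYTES = 50 * 1024 * 1024


def build_package(files):
    """Zip a {archive_path: bytes} mapping into an in-memory deployment package."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path, content in sorted(files.items()):
            archive.writestr(path, content)
    return buffer.getvalue()


def upload_route(package_bytes, limit=DIRECT_UPLOAD_LIMIT_BYTES):
    """Return 'direct' when the ZIP fits the direct-upload limit, otherwise 's3'."""
    return "direct" if len(package_bytes) <= limit else "s3"


package = build_package({"index.py": b"def handler(event, context):\n    return 'ok'\n"})
print(upload_route(package))
```

A real pipeline would then either pass the bytes to the Lambda API or upload them to a bucket first. That part is left out here because it depends on your tooling.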

The problem with deployment packages is that, over time, they accumulate more and more dependencies, which creates maintenance overhead. Even a small change in a dependency means the Function's package has to be touched, re-packaged, and tested.

Also, the more code you write, the more shared code you end up with that could be reused across several Functions. AWS Lambda Layers was launched to make that sharing possible.

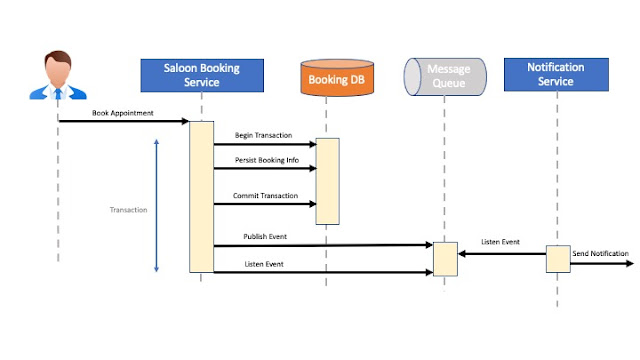

How AWS Lambda Layers Work

Lambda Layers provides a mechanism to package dependencies separately so that they can be shared across multiple Lambda functions. This lets Functions reuse code that has already been written, and it reduces both the amount of code in each Function and the size of its deployment artifact.

AWS Lambda Layers can be managed through the AWS CLI and APIs. AWS has also added Layers support to the AWS SAM framework and the AWS SAM CLI, which are used for packaging Lambda Function code.

A Lambda Function can use up to 5 layers. The total unzipped size of the function and all of its layers can't exceed 250 MB. Keep an eye on the AWS limits, as they change over time to accommodate new requirements.
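These two limits are easy to check before a deployment. The sketch below is a hypothetical pre-flight check (the function name and message wording are made up for illustration). It encodes only the two numbers quoted above.

```python
MAX_LAYERS = 5                           # layers per function
MAX_UNZIPPED_BYTES = 250 * 1024 * 1024   # function plus all layers, unzipped


def check_layer_config(function_unzipped_bytes, layer_unzipped_bytes):
    """Return a list of limit violations; an empty list means the config fits."""
    problems = []
    if len(layer_unzipped_bytes) > MAX_LAYERS:
        problems.append(f"{len(layer_unzipped_bytes)} layers configured, max is {MAX_LAYERS}")
    total = function_unzipped_bytes + sum(layer_unzipped_bytes)
    if total > MAX_UNZIPPED_BYTES:
        problems.append(f"unzipped size {total} bytes exceeds {MAX_UNZIPPED_BYTES}")
    return problems


mb = 1024 * 1024
print(check_layer_config(20 * mb, [50 * mb, 60 * mb]))
```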

When a Lambda Function with a Lambda layer is invoked, AWS downloads the specified layers and extracts them to the /opt directory in the Function's execution environment. Each runtime then looks for a language-specific folder (Node.js, Java, Python, and so on) under the /opt directory.

You can create and upload your own Lambda layers and publish them to share with others. You can also use an AWS-managed layer such as SciPy, or grab a third-party layer from an APN Partner or another reliable source. Below is a typical workflow for a Lambda layer:

Diagram placeholder

Including Library Dependencies in a Lambda Layer

Each Function has one or more runtime dependencies, and these can be moved out of the Function code by placing them in a Lambda layer. To include libraries in a layer, place them in one of the folders supported by your runtime, or modify the path variable for your language.

For example: Node.js – nodejs/node_modules, nodejs/node8/node_modules (NODE_PATH)

Lambda runtimes include paths under the /opt directory in their search paths, so your function code has access to the libraries included in Lambda layers.
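To make the folder convention concrete, here is a small sketch that builds a layer archive with the runtime-specific prefix. The Node.js prefix is the one listed above. The `python` and `java/lib` prefixes follow the AWS layer packaging documentation. The helper name and the sample file are hypothetical.

```python
import io
import posixpath
import zipfile

# Folder each runtime family searches once the layer is extracted under /opt.
LAYER_PREFIXES = {
    "nodejs": "nodejs/node_modules",
    "python": "python",
    "java": "java/lib",
}


def build_layer_zip(runtime_family, files):
    """Zip {relative_path: bytes} under the folder the given runtime expects."""
    prefix = LAYER_PREFIXES[runtime_family]
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for relative_path, content in sorted(files.items()):
            archive.writestr(posixpath.join(prefix, relative_path), content)
    return buffer.getvalue()


layer = build_layer_zip("nodejs", {"plain-lib/index.js": b"module.exports = {};\n"})
print(zipfile.ZipFile(io.BytesIO(layer)).namelist())
```

At run time this file would end up at /opt/nodejs/node_modules/plain-lib/index.js, which is on the Node.js module search path.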

AWS Lambda Permission for Layers

AWS Identity and Access Management (IAM) is used to manage access to Functions and Layers. Layer usage permissions are managed on the resource. To configure a function with a layer, you need permission to call GetLayerVersion on the layer version. For layers in your own account, you can get this permission from your user policy or from the Function's resource-based policy. To use a layer from another account, you need the permission in your user policy, and the owner of the other account must also grant your account permission with a resource-based policy.

The add-layer-version-permission command below adds the layer usage permission:

aws lambda add-layer-version-permission --layer-name log-sdk-nodejs --statement-id xaccount \
--action lambda:GetLayerVersion --principal 110927634125 --version-number 1 --output text

Permission is granted at the layer version level, so you have to repeat this step each time you publish a new version of the layer.
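Because the grant is per version, it is handy to generate the command for every version you publish. The sketch below only builds the command strings, reusing the flags and sample values from the command above. The function name is hypothetical, and nothing is executed against AWS.

```python
import shlex


def permission_commands(layer_name, principal, versions, statement_id="xaccount"):
    """Build one add-layer-version-permission command line per layer version."""
    commands = []
    for version in versions:
        args = [
            "aws", "lambda", "add-layer-version-permission",
            "--layer-name", layer_name,
            "--statement-id", statement_id,
            "--action", "lambda:GetLayerVersion",
            "--principal", principal,
            "--version-number", str(version),
            "--output", "text",
        ]
        # Quote each argument so the line is safe to paste into a shell.
        commands.append(" ".join(shlex.quote(arg) for arg in args))
    return commands


for line in permission_commands("log-sdk-nodejs", "110927634125", [1, 2]):
    print(line)
```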

How Lambda Layers Work in AWS SAM CLI

AWS SAM and its CLI replicate the Lambda service environment locally so that you can test before moving the code to the AWS cloud. To support Lambda layers, the SAM CLI downloads all the configured layers and caches them in the local environment. You can use the --layer-cache-basedir flag to specify the directory where the local layer cache is stored.

Layers are downloaded the first time you run sam local invoke, sam local start-lambda, or sam local start-api. To refresh the layer cache, you can use the --force-image-build flag.

The AWS::Serverless::LayerVersion resource type is used in the SAM template file to create a layer version that you can reference from your function configuration.

Below is an example of a SAM template for a Node.js application that uses the plain-nodejs-lib library as a layer.

AWSTemplateFormatVersion: '2010-09-09'
Transform: 'AWS::Serverless-2016-10-31'
Description: An AWS Lambda application for XRay demo.
Resources:
  function:
    Type: AWS::Serverless::Function
    Properties:
      Handler: index.handler
      Runtime: nodejs12.x
      CodeUri: function/.
      Description: Call the AWS Lambda API for XRay demo
      Timeout: 5
      # Function's execution role
      Policies:
        - AWSLambdaBasicExecutionRole
        - AWSLambdaReadOnlyAccess
        - AWSXrayWriteOnlyAccess
      Tracing: Active
      Layers:
        - !Ref libs
  libs:
    Type: AWS::Serverless::LayerVersion
    Properties:
      LayerName: plain-nodejs-lib
      Description: Dependencies for the plain nodejs app.
      ContentUri: lib/.
      CompatibleRuntimes:
        - nodejs12.x

Things to Remember for Lambda Layers

Though Lambda layers are a great way to distribute your code and share it with others, there are a few things to keep in mind:

For statically compiled languages such as Java, the compiler needs all the dependencies at compile time to build the JAR, so moving dependencies into a layer is not a straightforward integration.

Be careful when using layer versions shared by third parties. First, they might contain malware or vulnerabilities. Second, you have no control over their SDLC: if they remove the version that you are using in production and you then need to update your function, that layer version will no longer be available to you and the deployment will fail.

Lambda layers work well when you need to share the same code across multiple Functions in your own domain, because you keep full control over the versions.

If you have a very large dependency, you can use layers to reduce the deployment package size and the deployment time.

If you are building a custom runtime for Lambda Functions, layers are the best way to share it.

Summary

In this article, we looked at the role of AWS Lambda layers in building Lambda Function code. We also covered their features, how to enable and secure them, and how to use them with the SAM CLI. Keep in mind that this feature fits the specific circumstances discussed in this article. Used elsewhere, it may add overhead to the maintenance of the Function code.

AWS Lambda Limits

Serverless application architecture is the cornerstone of cloud IT applications. AWS Lambda has made it possible for developers to concentrate on business logic and set aside the worry of managing server provisioning, OS patching, upgrades and other infrastructure maintenance work.

However, designing serverless applications around AWS Lambda needs special care, especially when it comes to finding workarounds for AWS Lambda limitations. AWS Lambda limits the amount of compute and storage resources that you can use to run and store functions. AWS has deliberately put several soft and hard limits in place so that the service cannot be misused if it falls into the wrong hands. The limits also act as guardrails that steer you toward best practices when designing Lambda Functions.

In this article, we will take a closer look at the Lambda limits defined by AWS and how they can affect particular use cases. We will also look at the workarounds and solutions available to overcome these limits for valid use cases.

AWS Lambda limits fall into two groups: soft limits and hard limits.

Soft Limits

Soft limits come with default values. Lambda soft limits are per-region and can be increased by submitting a request to the AWS support team.

Concurrent Executions Limit

In Lambda, scaling is achieved by running Function instances concurrently. If the existing execution environments cannot handle all the requests at a given time, Lambda spins up another instance to handle the remaining requests. However, spinning up new instances without bound could drive up cost and be misused, so AWS applies a default concurrency limit of 1000.

This limit is configured at the account level and shared by all the Functions in the account. It protects the account from unintentional overuse, but a single Function can still consume most of the concurrency and affect the execution of other Functions. We will talk about overcoming that in the best practices section.
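The shared pool is easy to reason about with a little arithmetic. The sketch below assumes the default account limit of 1000 and the AWS rule that at least 100 concurrent executions must stay unreserved. The function names in the sample are hypothetical.

```python
ACCOUNT_CONCURRENCY_LIMIT = 1000   # default account-level limit quoted above
MIN_UNRESERVED = 100               # Lambda keeps at least this much unreserved


def unreserved_concurrency(reserved_by_function, account_limit=ACCOUNT_CONCURRENCY_LIMIT):
    """Return the concurrency left for functions without their own reservation."""
    remaining = account_limit - sum(reserved_by_function.values())
    if remaining < MIN_UNRESERVED:
        raise ValueError(
            f"only {remaining} unreserved executions left, need at least {MIN_UNRESERVED}"
        )
    return remaining


# Hypothetical per-function reservations.
print(unreserved_concurrency({"orders": 300, "payments": 200}))
```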

Function and Layer Storage

When you deploy a Function to the Lambda service, Lambda uses storage to keep the function code with its dependencies. Lambda keeps the code for every version, so each time you publish a newer version of the Function, its code is added to the storage.

AWS has set the storage limit to 75 GB, so follow the best practice of cleaning up the code of old versions. 75 GB seems like a very high number, but over the years it can be exhausted by frequent code updates.
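The selection logic for such a cleanup can be sketched independently of any AWS call. The function below is a hypothetical helper: it takes version identifiers as strings (as the Lambda API reports them), never touches `$LATEST` or versions that an alias points to, and keeps the newest few.

```python
def versions_to_delete(versions, aliased_versions, keep_latest=5):
    """Pick published versions that are safe to delete.

    versions          -- version identifiers as strings, e.g. ["$LATEST", "1", "2"]
    aliased_versions  -- versions that an alias still points to
    keep_latest       -- how many of the newest published versions to keep
    """
    numbered = sorted((int(v) for v in versions if v != "$LATEST"), reverse=True)
    protected = set(numbered[:keep_latest]) | {int(v) for v in aliased_versions}
    return sorted(v for v in numbered if v not in protected)


all_versions = ["$LATEST", "1", "2", "3", "4", "5", "6", "7", "8"]
print(versions_to_delete(all_versions, aliased_versions={"2"}, keep_latest=3))
```

An automation script would feed this from the list of published versions and then delete each returned version one by one.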

Elastic Network Interface per VPC

There are use cases where a Lambda Function needs VPC resources such as an RDS MySQL database. In that case, you need to configure the VPC subnets and Availability Zones for the Lambda Function. The Function connects to these VPC resources through an Elastic Network Interface (ENI).

Earlier, each Function instance needed its own ENI to connect to a VPC resource, so it was easy to hit the default threshold of 250 ENIs. With the newer Hyperplane-based VPC networking, far fewer ENIs are required for communication between a Function and VPC resources, and in most use cases this threshold is no longer hit.

Hard Limits

Hard limits are the ones that AWS will not increase on request. These Lambda limits apply to function configuration, deployments, and execution. We will talk about a few of the important limits in detail.

AWS Lambda Memory Limit

AWS Lambda is meant for small functions that run for a short duration, so the memory you can allocate to a Function is capped. The allocation starts at 128 MB. The cap was long set at 3 GB with 64 MB increments; AWS has since raised it to 10 GB (10,240 MB), configurable in 1 MB increments.

This memory is usually sufficient for event-driven business functions. However, CPU-intensive or computation-heavy workloads may hit timeout errors because they cannot complete within the allowed time. There are a few solutions for this, and we will talk about them in the best practices section.

AWS Lambda Timeout Limit

As discussed in the AWS Lambda memory limit section, a Function may time out if it doesn't finish executing in time. That time is at most 15 minutes (900 seconds). This is a hard limit: a Function has to complete its execution within it, or Lambda raises a timeout error.

This limit is very high for synchronous flows, which by nature are supposed to complete within a few seconds (3-6 seconds). For asynchronous flows, you need to be careful while designing the solution and ensure each function can complete its task within this period. If it cannot, break the logic into smaller Functions that each complete within the limit.
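One common way to stay inside the limit is to watch the remaining time and hand back unfinished work instead of being cut off. The sketch below relies on `get_remaining_time_in_millis()`, which the Python runtime provides on the context object. The `process` function, the event shape, the safety margin, and `FakeContext` are assumptions made for illustration.

```python
SAFETY_MARGIN_MS = 10_000  # stop early, leaving time to return a clean response


def process(item):
    """Placeholder for the real per-item work."""
    return item * 2


def handler(event, context):
    """Process items until the remaining time drops to the safety margin."""
    pending = list(event["items"])
    done = []
    while pending and context.get_remaining_time_in_millis() > SAFETY_MARGIN_MS:
        done.append(process(pending.pop(0)))
    # Whatever is left can be passed to the next invocation.
    return {"done": done, "remaining": pending}


class FakeContext:
    """Local stand-in for the Lambda context object, for testing only."""

    def __init__(self, remaining_ms, cost_per_call_ms):
        self.remaining_ms = remaining_ms
        self.cost_per_call_ms = cost_per_call_ms

    def get_remaining_time_in_millis(self):
        current = self.remaining_ms
        self.remaining_ms -= self.cost_per_call_ms
        return current


print(handler({"items": [1, 2, 3]}, FakeContext(30_000, 10_000)))
```

The `remaining` list is what a follow-up Function (or the next state in a workflow) would pick up.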

AWS Lambda Payload Limit

AWS has set the maximum payload size to 6 MB for synchronous invocations. This means you cannot pass more than 6 MB of data in an event, and the response is bound by the same limit. So, while designing a Lambda Function, make sure that consumers are not sending very heavy requests and that downstream systems are not returning very heavy responses. If they are, Lambda is not the right solution for that use case.
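A caller can guard against the limit by measuring the serialized payload before invoking. The sketch below also shows one possible fallback that the text does not prescribe: storing the payload elsewhere and sending only a pointer. `store_large_payload` is a hypothetical callable supplied by the caller, for example one that writes to S3 and returns the object key.

```python
import json

SYNC_PAYLOAD_LIMIT_BYTES = 6 * 1024 * 1024  # synchronous limit quoted above


def payload_size(payload):
    """Size in bytes of the payload once serialized as UTF-8 JSON."""
    return len(json.dumps(payload).encode("utf-8"))


def to_event(payload, store_large_payload, limit=SYNC_PAYLOAD_LIMIT_BYTES):
    """Send small payloads inline; replace large ones with a pointer."""
    if payload_size(payload) <= limit:
        return {"inline": payload}
    return {"pointer": store_large_payload(payload)}


# The lambda below stands in for a real upload and just returns a made-up key.
print(to_event({"order_id": 42}, store_large_payload=lambda p: "payloads/42.json"))
```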

AWS Lambda Deployment Package

The AWS Lambda size limit is 50 MB (zipped) when you upload the code directly to the Lambda service. If your deployment package is larger, you can upload it to S3 and have Lambda deploy it from there.

Another option is to use Lambda layers, with up to 5 layers per Function. Either way, the function and all of its layers together can be at most 250 MB unzipped. However, if you are deploying that much code, there is likely a problem in your design that you should look into. A Function is meant for a small piece of logic, and a huge package can cause long cold starts and latency problems.

Lambda Design Best Practices around Lambda Limits

Now that we have covered the most common Lambda limits, let's discuss the workarounds, tips, and best practices for designing Lambda Functions around them.

AWS sets the concurrent execution limit to 1000, but that is at the account level. You should also define a concurrency limit at the Function level so that overuse by one Function doesn't affect the other Functions in the account (the Bulkhead pattern).

Lambda versioning is a very important feature, but continuously updating a Function increases the storage requirement. You may hit the 75 GB threshold and only find out in the middle of a production deployment. So plan ahead with an automation script that cleans up old versions of each Function, keeping a fixed number of versions (perhaps 5-10).

For synchronous flows, keep the Function timeout very low (3-6 seconds). This ensures that resources are not tied up unnecessarily for a long time, and it saves cost. For asynchronous flows, use monitoring metrics to determine the average execution time and configure the timeout with some additional buffer. When deciding on the timeout, always keep in mind the downstream system's capacity and response SLA.

For CPU-intensive logic, allocate more memory to reduce the execution time, since Lambda allocates CPU in proportion to memory. However, keep in mind that a single-threaded application won't perform any better beyond about 1.8 GB of memory. Above that point, you need to design the logic to be multi-threaded so that it can use the second CPU core.

For batch processes that need more than 15 minutes to execute, break the logic into multiple Functions and use Lambda Destinations or Step Functions to stitch the events together.

A Lambda Function has temporary instance storage in /tmp, with 512 MB of capacity by default. Its contents are lost when the instance is stopped, which happens automatically some time after execution completes. Don't rely on this storage for state, as a Function should be designed for stateless flows.
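If a Function does need scratch space during a single invocation, it can use the temporary directory and clean up before returning, so nothing is carried over as state. This is a minimal sketch: the function name and the upper-casing "work" are placeholders, and `tempfile.gettempdir()` is used so the same code runs locally (on Lambda it normally resolves to /tmp).

```python
import os
import tempfile


def transform_with_scratch(data, scratch_root=None):
    """Use scratch space for one invocation and leave nothing behind."""
    scratch_root = scratch_root or tempfile.gettempdir()
    # The directory and everything in it is removed when the block exits.
    with tempfile.TemporaryDirectory(dir=scratch_root) as workdir:
        path = os.path.join(workdir, "input.bin")
        with open(path, "wb") as handle:
            handle.write(data)
        with open(path, "rb") as handle:
            result = handle.read().upper()  # placeholder for real processing
    return result


print(transform_with_scratch(b"abc"))
```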

Summary

AWS Lambda has quite a number of limits, but most are deliberately thought through. These limits are not there to restrict your use of the Lambda service but to protect you from unintentional usage and DDoS-type attacks. You just need to be aware of these limits and follow the best practices discussed here to get the best out of Lambda Functions.

AWS Lambda Security

The security of an application is one of its most important non-functional requirements. Every application and its underlying infrastructure has to follow strict security guidelines to secure the whole system. Serverless architecture is getting more attention from the developer community, and from hackers as well, and AWS Lambda is a widely used service for hosting serverless applications.

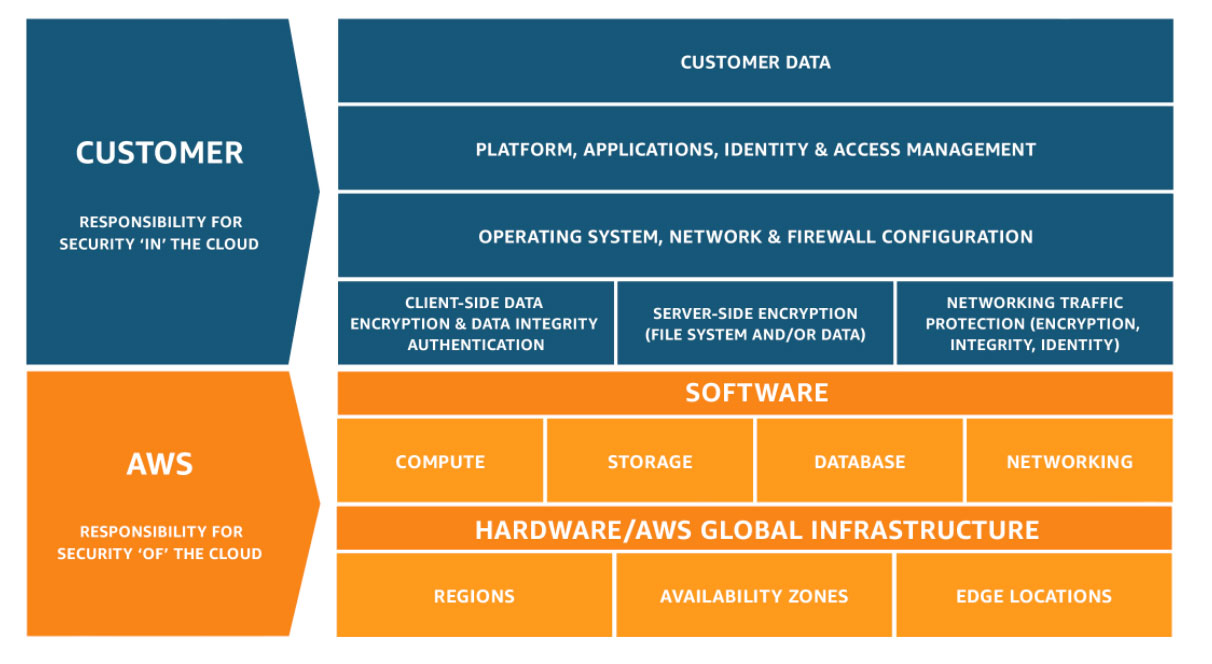

There are several myths around Lambda and serverless architecture, and the most common one is that the security of these apps rests entirely with AWS. That is not correct. AWS follows the shared responsibility model, in which AWS manages the infrastructure, foundation services, and the operating system, while the customer is responsible for the security of the code, the data used by Lambda, and the IAM policies that control access to the Lambda service.

By developing applications using a serverless architecture, you relieve yourself of the daunting task of constantly applying security patches to the underlying OS and application servers, and you can concentrate more on data protection for the application.

In this article, we are going to discuss many different aspects of the security of the Lambda function.

Data Protection in AWS Lambda

As part of data protection in AWS Lambda, we first need to protect account credentials and set up individual user accounts with IAM policies. We need to ensure that each user is given only the privileges needed to do their job.

The following are different ways to secure data in Lambda:

Use multi-factor authentication (MFA) for each user account.

Use SSL/TLS for communication between Lambda and other AWS resources.

Set up AWS CloudTrail with API and user activity logging.

Use the AWS encryption solutions for data at rest and in transit, along with all default security controls within AWS services.

Never put sensitive identifying information, such as account numbers or service credentials, in the code.

Encryption in Transit

Lambda API endpoints are accessed through secure connections over HTTPS. When we manage Lambda resources with the AWS Management Console, AWS SDK, or the Lambda API, all communication is encrypted with Transport Layer Security (TLS).

When a Lambda function connects to a file system, it uses encryption in transit for all connections.

Encryption at Rest

You can use environment variables to pass secrets to a Lambda function. Lambda always encrypts these environment variables at rest.

There are two features available in Lambda for encrypting environment variables:

AWS KMS keys

For each Lambda function, we can define a KMS key to encrypt the environment variables. The key can be either an AWS managed CMK or a customer managed CMK.

Encryption helpers

When this feature is enabled, environment variables are encrypted on the client side before they are sent to Lambda. This ensures secrets are not displayed unencrypted in the AWS Lambda console, the CLI, or API output.
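With encryption helpers, the Function itself has to decrypt the value at run time. The sketch below follows the pattern of the snippet that the Lambda console generates: base64-decode the variable and call KMS `decrypt` with the function name as encryption context. The KMS client is passed in as a parameter (in a real Function it would typically be `boto3.client("kms")`), and the variable name `DB_PASSWORD` is a made-up example.

```python
import base64
import os


def decrypt_env(name, kms_client, function_name=None):
    """Decrypt an environment variable that was encrypted with encryption helpers."""
    function_name = function_name or os.environ["AWS_LAMBDA_FUNCTION_NAME"]
    ciphertext = base64.b64decode(os.environ[name])
    response = kms_client.decrypt(
        CiphertextBlob=ciphertext,
        # Lambda's helpers bind the ciphertext to the function name.
        EncryptionContext={"LambdaFunctionName": function_name},
    )
    return response["Plaintext"].decode("utf-8")
```

Decrypt once outside the handler (at module load) and reuse the value, so that each invocation does not pay for a KMS call.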

Lambda always encrypts files that are uploaded to Lambda, including deployment packages and layer archives.

Amazon CloudWatch Logs and AWS X-Ray, which are used for logging, tracing, and monitoring, also encrypt data by default and can be configured to use a CMK.

IAM Management for AWS Lambda

IAM management in AWS typically handles users, groups, roles, and policies. In a new account, IAM users and roles don't have permission for Lambda resources by default. An IAM administrator must first create IAM policies that grant users and roles permission to perform specific API operations on Lambda and other AWS services. The administrator must then attach those policies to the IAM users or groups that require those permissions.

There are a few best practices to handle the IAM policies:

AWS has already created many managed policies for Lambda. To get started quickly, attach these policies to your users.

Start with the least privileges and add more as needed, rather than being too lenient initially and trying to tighten them later.

For sensitive operations, enable multi-factor authentication (MFA).

Use policy conditions to enhance security. For example, allow requests only from a range of IP addresses, or only within a specified date or time range.
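As an illustration of policy conditions, the sketch below builds a policy document that allows invoking one function only from a given IP range and within a time window. The condition operators and the `aws:SourceIp` and `aws:CurrentTime` keys are standard IAM. The function ARN, CIDR block, and dates are placeholder values.

```python
import json


def invoke_policy(function_arn, cidr, not_before, not_after):
    """Allow lambda:InvokeFunction only from `cidr` and between the two timestamps."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                "Resource": function_arn,
                "Condition": {
                    "IpAddress": {"aws:SourceIp": cidr},
                    "DateGreaterThan": {"aws:CurrentTime": not_before},
                    "DateLessThan": {"aws:CurrentTime": not_after},
                },
            }
        ],
    }


policy = invoke_policy(
    "arn:aws:lambda:us-east-1:123456789012:function:example",  # placeholder ARN
    "203.0.113.0/24",                                           # documentation range
    "2020-01-01T00:00:00Z",
    "2020-12-31T23:59:59Z",
)
print(json.dumps(policy, indent=2))
```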

Auto-Generate Least-Privileged IAM Roles

An open-source tool for AWS Lambda security is available that automatically generates AWS IAM roles with the least privileges required by your functions. The tool:

Saves time by automatically creating IAM roles for the function

Reduces the attack surface of Lambda functions

Helps create least-privileged roles with the minimum required permissions

Supports Node.js and Python runtimes for now.

Supports Lambda, Kinesis, KMS, S3, SES, SNS, DynamoDB, and Step Functions services for now.

Works with the serverless framework

Logging and Monitoring for AWS Lambda

AWS offers two tools for watching for security incidents in AWS Lambda: Amazon CloudWatch and AWS CloudTrail.

For Lambda security, CloudWatch should be used to:

Monitor the “concurrent executions” metric for a function, and investigate spikes in concurrent executions on a regular basis.

Monitor Lambda throttling metrics.

Monitor AWS Lambda error metrics. If you observe a spike in timeouts, it may indicate a DDoS attack.
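The three checks above map to the `ConcurrentExecutions`, `Throttles`, and `Errors` metrics in the `AWS/Lambda` namespace. The sketch below only builds alarm definitions as plain dictionaries, shaped like the keyword arguments of CloudWatch's `put_metric_alarm` call. The function name `orders` and the thresholds are made-up values that you would tune from your own baselines.

```python
def lambda_alarm(function_name, metric_name, threshold, statistic="Sum", period_seconds=60):
    """Build a CloudWatch alarm definition for one Lambda metric."""
    return {
        "AlarmName": f"{function_name}-{metric_name}",
        "Namespace": "AWS/Lambda",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": statistic,
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
    }


alarms = [
    lambda_alarm("orders", "ConcurrentExecutions", 200, statistic="Maximum"),
    lambda_alarm("orders", "Throttles", 0),
    lambda_alarm("orders", "Errors", 5),
]
for alarm in alarms:
    print(alarm["AlarmName"], alarm["Threshold"])
```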

When we enable data event logging, CloudTrail logs function invocations, so we can view the identities invoking the functions and how often they do so. Each invocation is logged in CloudTrail with a timestamp, which helps to verify the source caller.

One of the most significant benefits of enabling CloudWatch and CloudTrail for your AWS Lambda serverless functions comes from the built-in automation. Notifications, messages, and alerts can be set up that are triggered by events in your AWS ecosystem. These alerts enable you to react to potential security risks as soon as they are introduced.

Securing APIs with API Gateway

Amazon API Gateway along with AWS Lambda enables us to build secure APIs with a serverless architecture. With this, we can run a fully managed REST API that integrates with AWS Lambda functions to execute business logic.

The following controls can be used to manage access to APIs:

Generate API keys and use them with usage plans that enforce usage quotas.

Use AWS IAM roles and policies to grant access to users.

Use Cognito user pools to enable authentication. They can also authenticate users through third-party providers such as Facebook, Twitter, GitHub, and so on.

Use Lambda authorizer functions to control access at the API method level. Authorization can be based on a token or on request parameters such as headers, query string parameters, URL paths, or stage variables.
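A token-based Lambda authorizer can be sketched as follows. API Gateway passes the token in `authorizationToken` and the called method in `methodArn`, and expects a principal plus a policy document in return. The hard-coded `EXPECTED_TOKEN` and the `example-user` principal are placeholders for illustration only. A real authorizer would validate a signed token such as a JWT.

```python
import hmac

EXPECTED_TOKEN = "allow-me"  # placeholder; never hard-code real secrets


def authorizer_handler(event, context):
    """Return an Allow or Deny policy for the method being called."""
    token = event.get("authorizationToken", "")
    # Constant-time comparison avoids leaking the token through timing.
    allowed = hmac.compare_digest(token.encode("utf-8"), EXPECTED_TOKEN.encode("utf-8"))
    return {
        "principalId": "example-user",
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": "Allow" if allowed else "Deny",
                    "Resource": event["methodArn"],
                }
            ],
        },
    }


sample_event = {
    "authorizationToken": "allow-me",
    "methodArn": "arn:aws:execute-api:us-east-1:123456789012:abc123/prod/GET/orders",
}
print(authorizer_handler(sample_event, None)["policyDocument"]["Statement"][0]["Effect"])
```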

Summary

Serverless architecture takes away a lot of the pain of operations management and offloads the onus of patching the OS and other infrastructure-level security concerns. However, it also opens new attack vectors, such as event injection and others that are not yet known. The security basics remain the same: application and data-level security have to be enabled and monitored regularly to avoid attacks.
